Storytelling and Reading

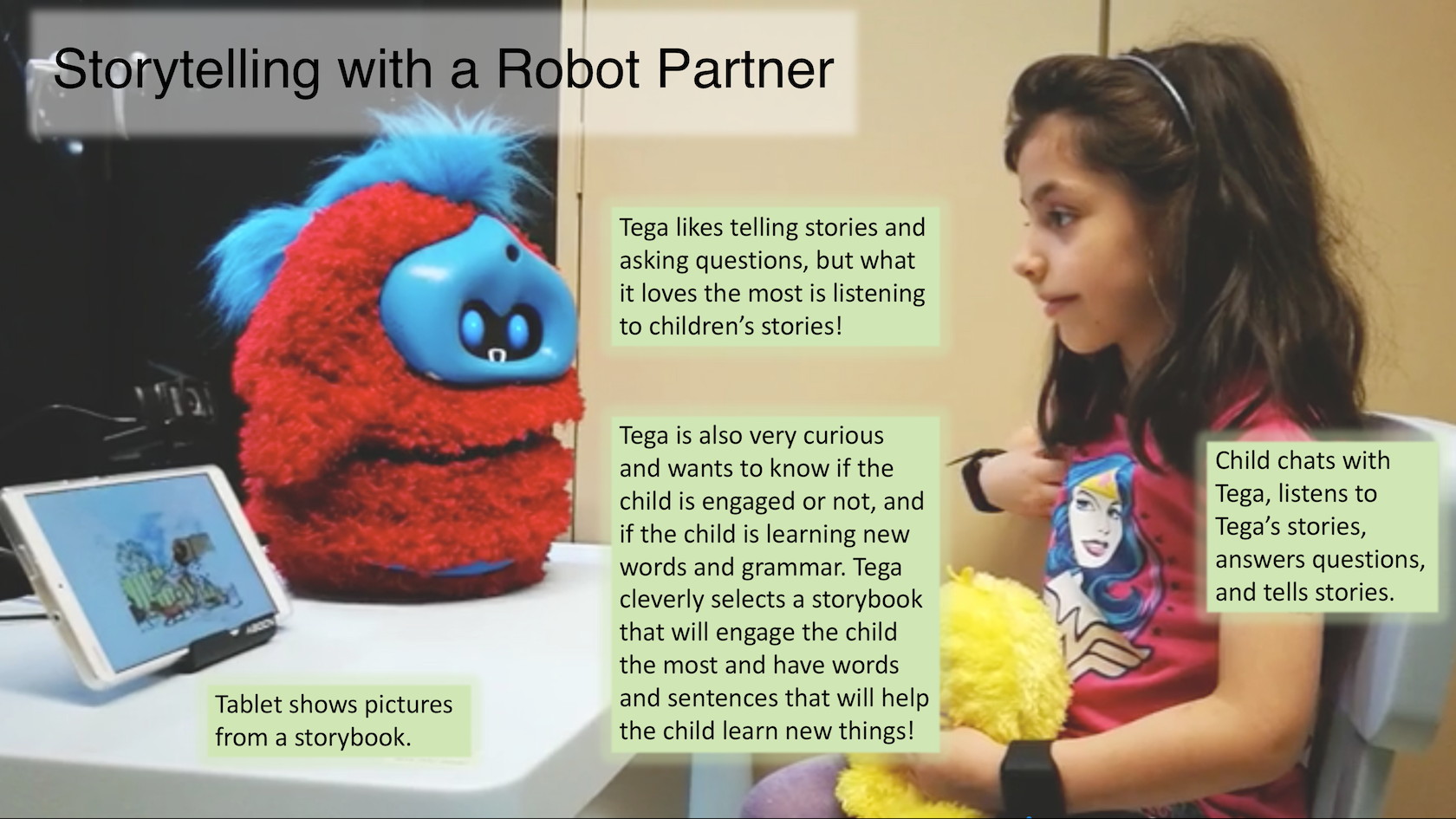

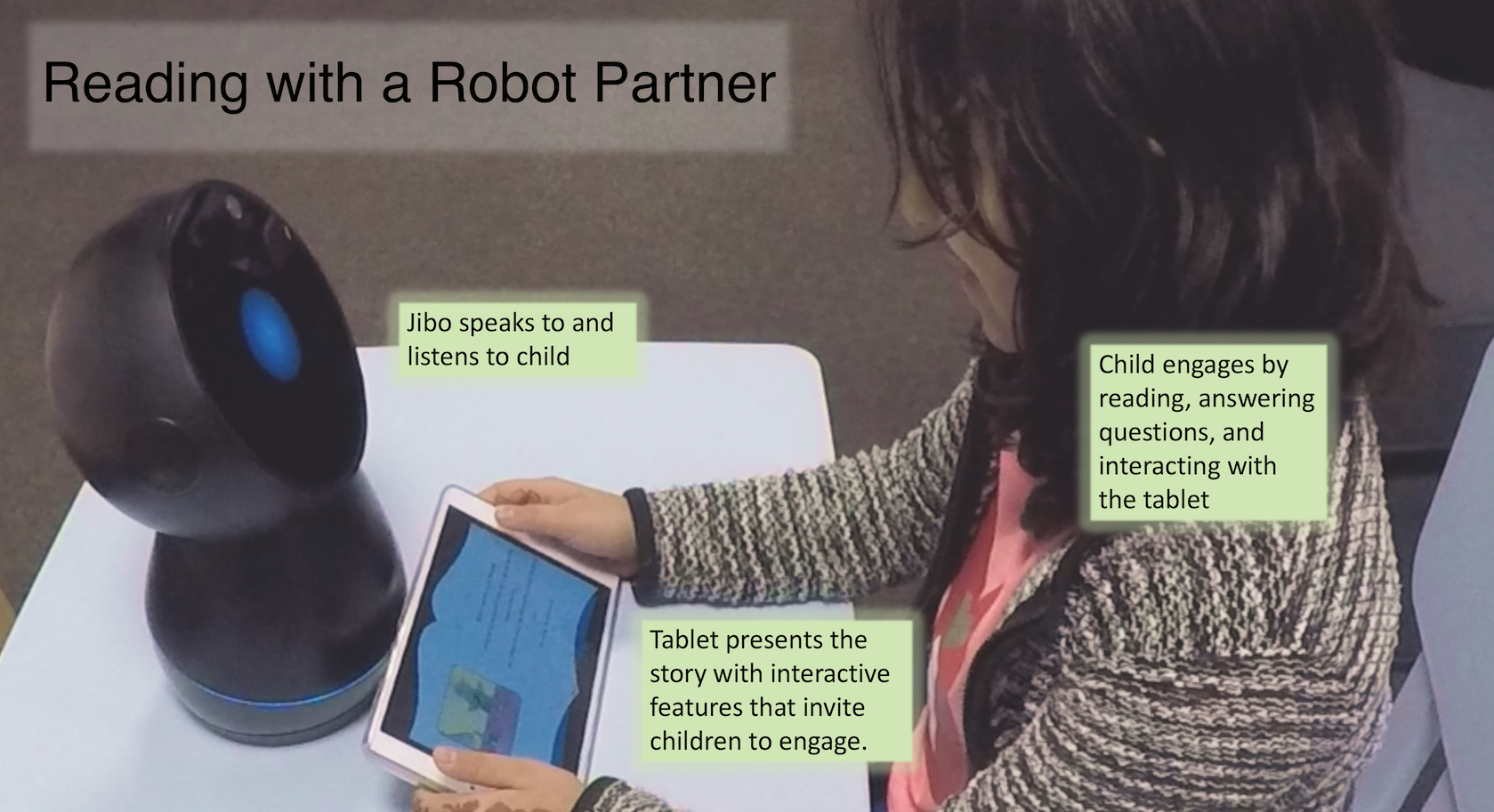

Our robots like to tell and read stories with children. When Tega and Jibo listen to children's stories, they can tell whether the child has learned a new word or a new sentence structure. When they tell stories, they want to know whether the child is engaged and having fun, so they closely watch the child's emotions and attention. Tega and Jibo also ask the child questions from time to time to help them think and learn.

Our robots can figure out which storybook would best help each child learn and practice new words and sentences. Every child learns and engages differently, and we want our robots to understand that.

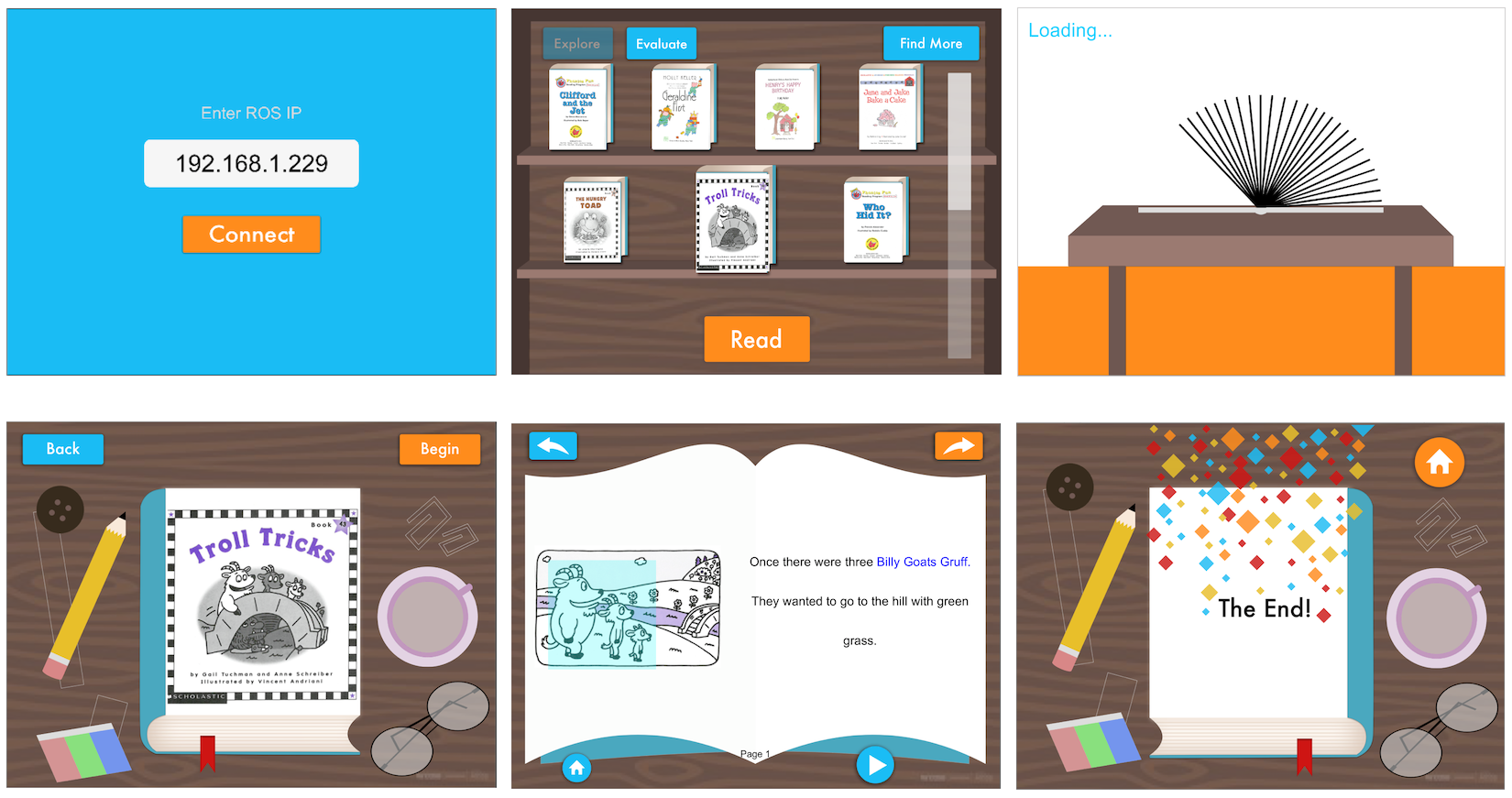

Here is the storybook on a tablet. Our robots don't have hands to touch the screen like we do, but they can use their brain power to tell the tablet what to do, like flipping pages! Isn't that cool? The secret is that the robot and the tablet can talk to each other over Wi-Fi.

Let's look at the storybook again. You can click on the words and pictures in the storybook, and it will read the word aloud to you. Sometimes Jibo and Tega might ask you, "What are the names of those goats?" and you can tell them "Billy Goats Gruff" or touch the words on the screen. You can also ask the robots, "Hey Jibo, how do you read this word?" and click on the word, and Jibo might help you read it: "Let me try. I think that word is grass!"

Here is a video of Tega and children telling stories to each other. Just like you, robots get smarter and smarter every time they tell and listen to stories!

Selected Publications:

Hae Won Park, Ishaan Grover, Samuel Spaulding, Louis Gomez, and Cynthia Breazeal. "A Model-free Affective Reinforcement Learning Approach to Personalization of a Social Robot Companion for Early Literacy Education." In Association for the Advancement of Artificial Intelligence (AAAI), 2019.

Hae Won Park, Mirko Gelsomini, Jin Joo Lee, and Cynthia Breazeal. "Telling stories to robots: The effect of backchanneling on a child's storytelling." In ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2017.